Documentation

- 1: What's New ✨

- 2: Overview

- 2.1: Benchmark

- 3: Operating

- 3.1: Setup

- 3.1.1: Installation

- 3.1.2: OpenShift

- 3.1.3: Rancher

- 3.1.4: Managed Kubernetes

- 3.1.5: Controller Options

- 3.2: Admission Policies

- 3.3: Architecture

- 3.4: Best Practices

- 3.4.1: Workloads

- 3.4.2: Networking

- 3.4.3: Container Images

- 3.5: Authentication

- 3.6: Monitoring

- 3.7: Backup & Restore

- 3.8: Troubleshooting

- 4: Tenants

- 4.1: Quickstart

- 4.2: Namespaces

- 4.3: Permissions

- 4.4: Quotas

- 4.5: Enforcement

- 4.6: Metadata

- 4.7: Administration

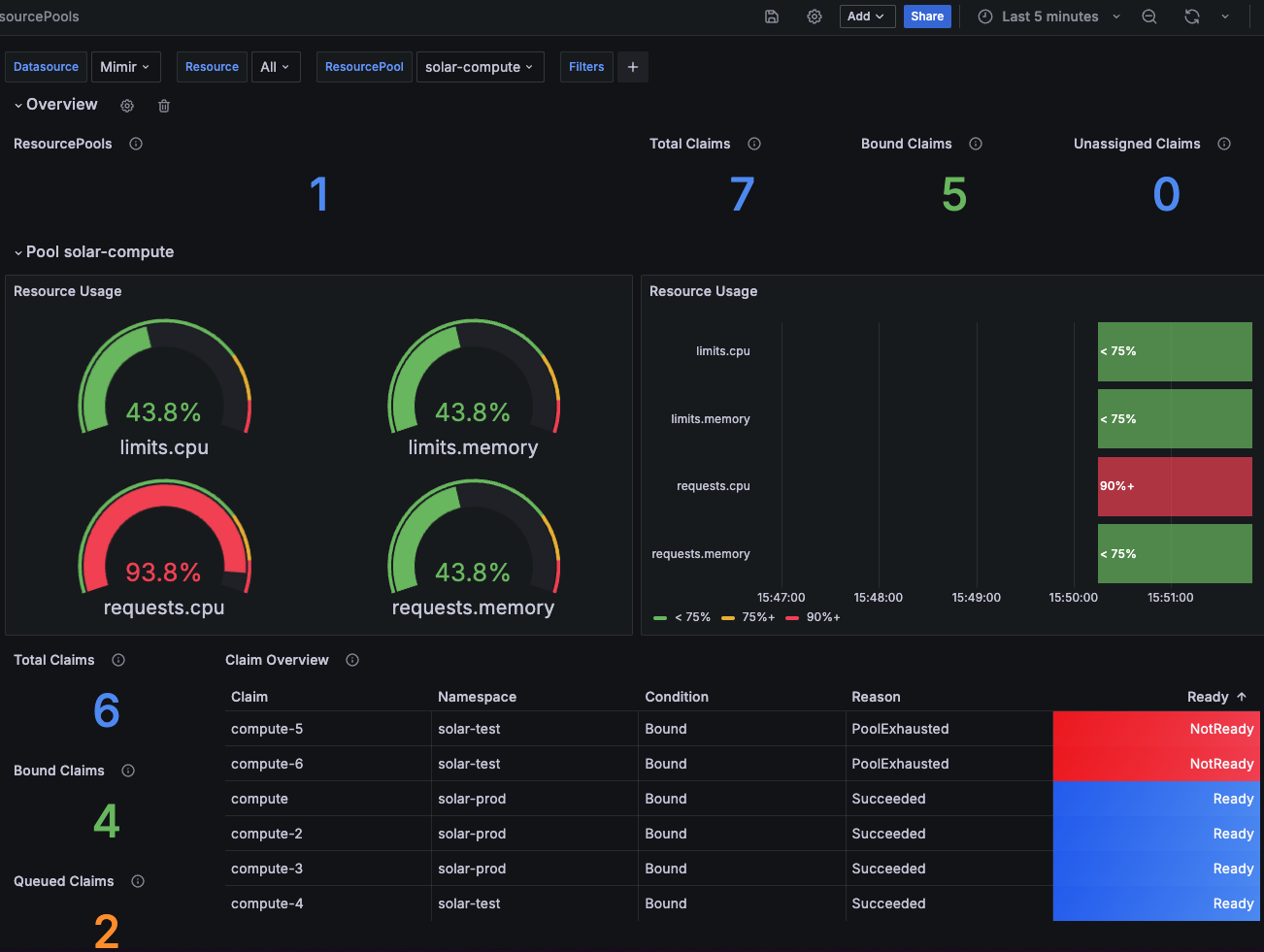

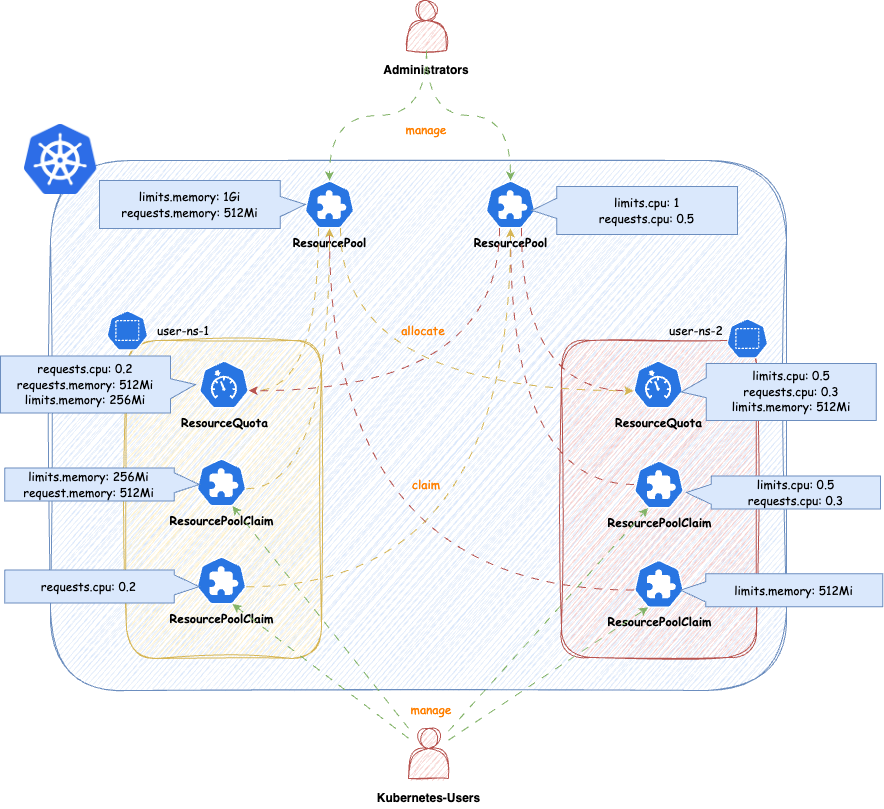

- 5: Resource Pools

- 6: Replications

- 7: Proxy

- 7.1: Installation

- 7.2: ProxySettings

- 7.3: Controller Options

- 7.4: API Reference

- 8: Guides

- 9: API Reference

1 - What's New ✨

Features

Admission Webhooks return warnings for deprecated fields in Capsule resources. You are encouraged to update your resources accordingly.

Added --enable-pprof flag to enable pprof endpoint for profiling Capsule controller performance. Not recommended for production environments. Read More.

Added --workers flag to define the MaxConcurrentReconciles for relevant controllers. Read More.

Combined Capsule Users Configuration for defining all users and groups which should be considered for Capsule tenancy. This simplifies the configuration and avoids confusion between users and groups. Read More

All namespaced items which belong to a Capsule Tenant are now labeled with the Tenant name (e.g. capsule.clastix.io/tenant: solar). This allows easier filtering and querying of resources belonging to a specific Tenant or Namespace. Note: This happens at admission, not in the background. If you want your existing resources to be labeled, you need to reapply them or patch them manually to get the labels added.

Delegate Administrators for Capsule tenants. Administrators have full control (ownership) over all tenants and their namespaces. Read More

Added Dynamic Resource Allocation (DRA) support. Administrators can now assign allowed DeviceClasses to tenant owners. Read More

All available Classes for a tenant (StorageClasses, GatewayClasses, RuntimeClasses, PriorityClasses, DeviceClasses) are now reported in the Tenant Status. These values can be used by Admission to integrate other resources validation or by external systems for reporting purposes (Example).

apiVersion: capsule.clastix.io/v1beta2
kind: Tenant
metadata:
  name: solar
...
status:
  classes:
    priority:
    - system-cluster-critical
    - system-node-critical
    runtime:
    - customer-containerd
    - customer-runu
    - customer-virt
    - default-runtime
    - disallowed
    - legacy
    storage:
    - standard

- All available Owners for a tenant are now reported in the Tenant Status. This allows external systems to query the Tenant resource for its owners instead of querying the RBAC system.

apiVersion: capsule.clastix.io/v1beta2
kind: Tenant
metadata:
  name: solar
...
status:
  owners:
  - clusterRoles:
    - admin
    - capsule-namespace-deleter
    kind: Group
    name: oidc:org:devops:a
  - clusterRoles:
    - admin
    - capsule-namespace-deleter
    - mega-admin
    - controller
    kind: ServiceAccount
    name: system:serviceaccount:capsule:controller
  - clusterRoles:
    - admin
    - capsule-namespace-deleter
    kind: User
    name: alice

- Introduction of the TenantOwner CRD. Read More

apiVersion: capsule.clastix.io/v1beta2
kind: TenantOwner
metadata:
  labels:
    team: devops
  name: devops
spec:
  kind: Group
  name: "oidc:org:devops:a"
  clusterRoles:
  - "mega-admin"
  - "controller"
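For the admission-time tenant label and the owners reported in the Tenant status, two small helpers may be useful. This is a minimal sketch, assuming it is run with cluster-admin credentials and that admission accepts the label being set directly on existing objects. The function names relabel_kind and tenant_owners are hypothetical; the label key and the status.owners fields are taken from the examples above.

```shell
#!/bin/sh
# Hypothetical helpers; the function names are illustrative, not part of Capsule.

# Add the tenant label to every existing object of one kind in a namespace.
# Usage: relabel_kind <namespace> <tenant> <kind>
relabel_kind() {
  kubectl label "$3" --all --namespace "$1" --overwrite \
    "capsule.clastix.io/tenant=$2"
}

# Print "<kind><TAB><name>" for each owner recorded in the Tenant status.
# Usage: tenant_owners <tenant>
tenant_owners() {
  kubectl get tenant "$1" \
    -o jsonpath='{range .status.owners[*]}{.kind}{"\t"}{.name}{"\n"}{end}'
}
```

For example, `relabel_kind solar-prod solar configmaps` would label all ConfigMaps in a namespace called solar-prod (an assumed name), and `tenant_owners solar` would list the owners without querying RBAC.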

Fixes

Admission Webhooks for namespaces had certain dependencies on the first reconcile of a tenant (namespace being allocated to this tenant). This bug has been fixed and now namespaces are correctly assigned to the tenant (at admission) even if the tenant has not yet been reconciled.

The entire core package and the admission webhooks have been substantially refactored to improve the maintainability and extensibility of Capsule.

Documentation

We have added new documentation for a better experience. See the following Topics:

Ecosystem

Newly added documentation to integrate Capsule with other applications:

2 - Overview

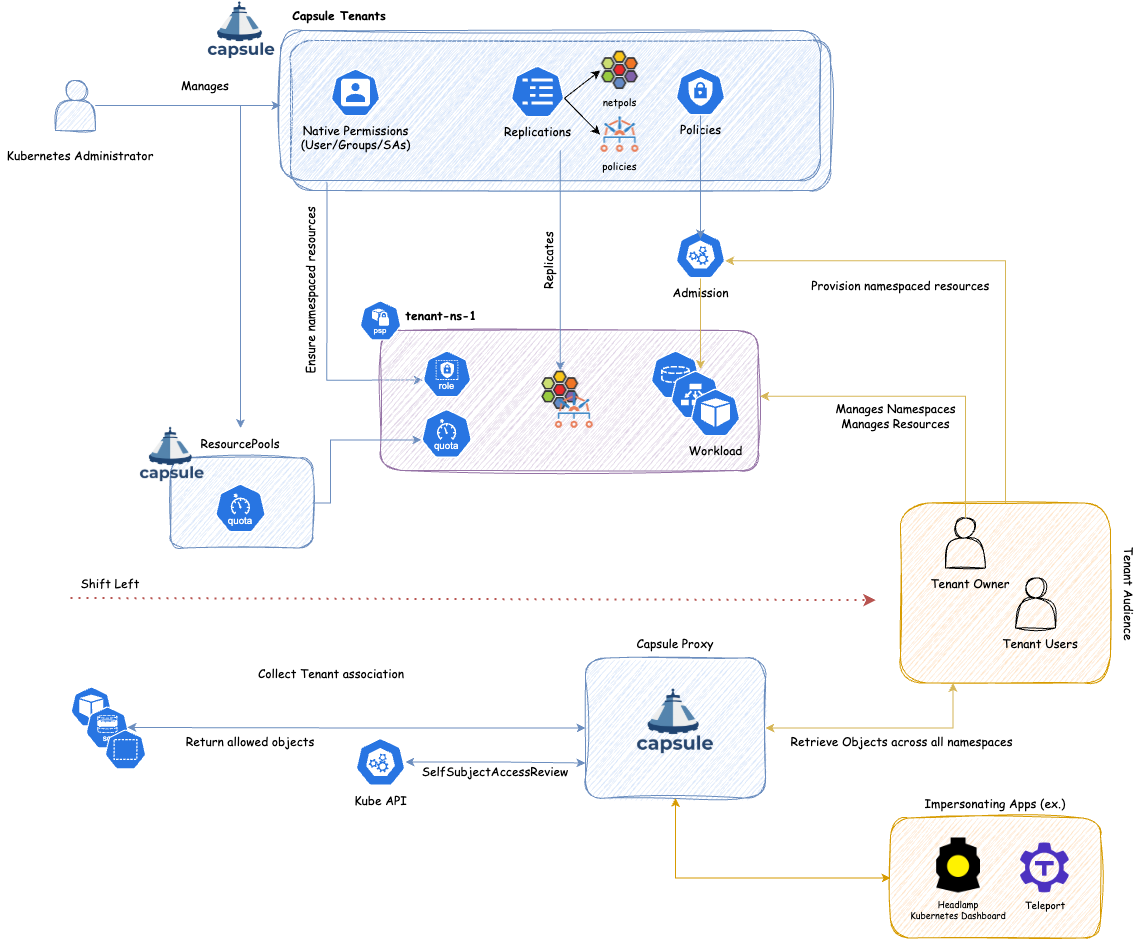

Capsule implements a multi-tenant, policy-based environment in your Kubernetes cluster. It is designed as a microservices-based ecosystem with a minimalist approach, leveraging only upstream Kubernetes.

With Capsule, you have an ecosystem that addresses the challenges of hosting multiple parties on a shared Kubernetes cluster. Let’s look at a typical scenario for using Capsule.

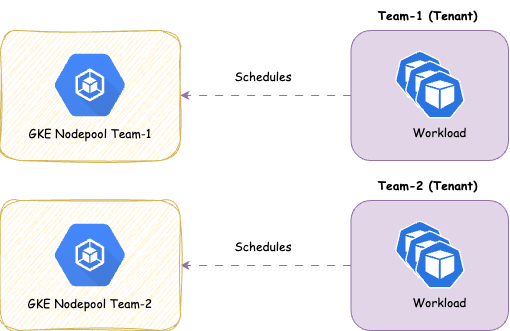

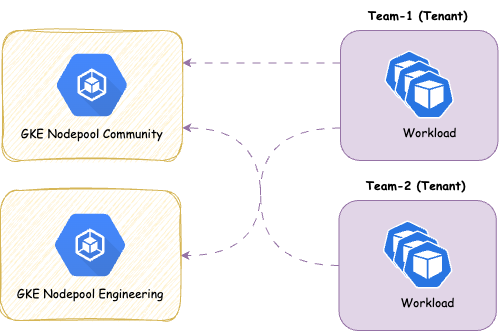

As shown, we can create a new boundary between Kubernetes (cluster) administrators and tenant audiences. While Kubernetes administrators define the boundaries of a tenant, the tenant audience can act within the namespaces of that tenant. For the tenant audience, we differentiate between Tenant Owners and Tenant Users. The main advantage Tenant Owners are granted is the ability to create namespaces within the tenants they own. This achieves a shift-left approach: instead of depending on Kubernetes administrators to create namespaces, Tenant Owners can manage this themselves, thereby granting them greater autonomy within strictly defined boundaries.

What’s the problem with the current status?

Kubernetes introduces the Namespace object type to create logical partitions of the cluster as isolated slices. However, when implementing advanced multi-tenancy scenarios, this soon becomes complicated because of the flat structure of Kubernetes namespaces and the impossibility of sharing resources among namespaces belonging to the same tenant. To overcome this, cluster admins tend to provision a dedicated cluster for each group of users, teams, or departments. As an organization grows, the number of clusters to manage and keep aligned becomes an operational nightmare, described as the well-known phenomenon of cluster sprawl.

Entering Capsule

Capsule takes a different approach. In a single cluster, the Capsule Controller aggregates multiple namespaces in a lightweight abstraction called a Tenant, which is basically a grouping of Kubernetes namespaces. Within each tenant, users are free to create their namespaces and share all the assigned resources.

On the other side, the Capsule Policy Engine keeps the different tenants isolated from each other. Network and security policies, resource quotas, limit ranges, RBAC, and other policies defined at the tenant level are automatically inherited by all the namespaces in the tenant. Users are then free to operate their tenants autonomously, without intervention from the cluster administrator.

What problems are out of scope

Capsule does not aim to solve the following problems:

- Handling of Custom Resource Definition management. Capsule does not aim to manage the control of Custom Resource Definition. Users have to implement their own solution.

2.1 - Benchmark

The Multi-Tenancy Benchmark is a WG (Working Group) committed to achieving multi-tenancy in Kubernetes.

The Benchmarks are guidelines that validate if a Kubernetes cluster is properly configured for multi-tenancy.

Capsule is an open source multi-tenancy operator, and we decided to meet the requirements of MTB, although at the time of writing MTB is still in development and not ready for use. Strictly speaking, we do not claim official conformance to MTB; we only adhere to the multi-tenancy requirements and best practices it promotes.

Allow self-service management of Network Policies

Profile Applicability: L2

Type: Behavioral

Category: Self-Service Operations

Description: Tenants should be able to perform self-service operations by creating their own network policies in their namespaces.

Rationale: Enables self-service management of network-policies.

Audit:

As cluster admin, create a tenant

kubectl create -f - <<EOF
apiVersion: capsule.clastix.io/v1beta2
kind: Tenant
metadata:
  name: oil
spec:
  owners:
  - kind: User
    name: alice
  networkPolicies:
    items:
    - ingress:
      - from:
        - namespaceSelector:
            matchLabels:
              capsule.clastix.io/tenant: oil
      podSelector: {}
      policyTypes:
      - Egress
      - Ingress
EOF

./create-user.sh alice oil

As tenant owner, run the following command to create a namespace in the given tenant

kubectl --kubeconfig alice create ns oil-production

kubectl --kubeconfig alice config set-context --current --namespace oil-production

As tenant owner, retrieve the networkpolicies resources in the tenant namespace

kubectl --kubeconfig alice get networkpolicies

NAME POD-SELECTOR AGE

capsule-oil-0 <none> 7m5s

As tenant owner, check for permissions to manage networkpolicies for each verb

kubectl --kubeconfig alice auth can-i get networkpolicies

kubectl --kubeconfig alice auth can-i create networkpolicies

kubectl --kubeconfig alice auth can-i update networkpolicies

kubectl --kubeconfig alice auth can-i patch networkpolicies

kubectl --kubeconfig alice auth can-i delete networkpolicies

kubectl --kubeconfig alice auth can-i deletecollection networkpolicies

Each command must return ‘yes’
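The per-verb checks in these audits can be looped rather than typed one by one. The following is a minimal sketch, assuming kubectl is on the PATH and the kubeconfig file names match the ones used in this page; check_verbs is a hypothetical helper name. It compares only the first word of the answer, because kubectl may append a reason after "no".

```shell
#!/bin/sh
# Hypothetical helper: verify that every verb yields the expected answer.
# Usage: check_verbs <kubeconfig> <resource> <yes|no> [verb...]
check_verbs() {
  cv_kubeconfig=$1
  cv_resource=$2
  cv_expected=$3
  shift 3
  # Default to the verb list used in the audits when none is given
  [ "$#" -gt 0 ] || set -- get create update patch delete deletecollection
  cv_failed=0
  for cv_verb in "$@"; do
    # can-i may exit non-zero when the answer is "no", so ignore its status
    cv_answer=$(kubectl --kubeconfig "$cv_kubeconfig" auth can-i "$cv_verb" "$cv_resource" 2>/dev/null) || true
    cv_answer=${cv_answer%% *}   # keep only "yes" or "no"
    if [ "$cv_answer" != "$cv_expected" ]; then
      echo "FAIL: $cv_verb $cv_resource -> ${cv_answer:-<no output>} (expected $cv_expected)"
      cv_failed=1
    fi
  done
  return "$cv_failed"
}
```

Called as `check_verbs alice networkpolicies yes`, it prints nothing and returns 0 when every verb is allowed. The same helper can cover the audits that expect a denial, for example `check_verbs alice quota no create update patch delete deletecollection`.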

Cleanup: As cluster admin, delete all the created resources

kubectl --kubeconfig cluster-admin delete tenant oil

Allow self-service management of Role Bindings

Profile Applicability: L2

Type: Behavioral

Category: Self-Service Operations

Description: Tenants should be able to perform self-service operations by creating their rolebindings in their namespaces.

Rationale: Enables self-service management of roles.

Audit:

As cluster admin, create a tenant

kubectl create -f - <<EOF
apiVersion: capsule.clastix.io/v1beta2
kind: Tenant
metadata:
  name: oil
spec:
  owners:
  - kind: User
    name: alice
EOF

./create-user.sh alice oil

As tenant owner, run the following command to create a namespace in the given tenant

kubectl --kubeconfig alice create ns oil-production

kubectl --kubeconfig alice config set-context --current --namespace oil-production

As tenant owner, check for permissions to manage rolebindings for each verb

kubectl --kubeconfig alice auth can-i get rolebindings

kubectl --kubeconfig alice auth can-i create rolebindings

kubectl --kubeconfig alice auth can-i update rolebindings

kubectl --kubeconfig alice auth can-i patch rolebindings

kubectl --kubeconfig alice auth can-i delete rolebindings

kubectl --kubeconfig alice auth can-i deletecollection rolebindings

Each command must return ‘yes’

Cleanup: As cluster admin, delete all the created resources

kubectl --kubeconfig cluster-admin delete tenant oil

Allow self-service management of Roles

Profile Applicability: L2

Type: Behavioral

Category: Self-Service Operations

Description: Tenants should be able to perform self-service operations by creating their own roles in their namespaces.

Rationale: Enables self-service management of roles.

Audit:

As cluster admin, create a tenant

kubectl create -f - <<EOF
apiVersion: capsule.clastix.io/v1beta2
kind: Tenant
metadata:
  name: oil
spec:
  owners:
  - kind: User
    name: alice
EOF

./create-user.sh alice oil

As tenant owner, run the following command to create a namespace in the given tenant

kubectl --kubeconfig alice create ns oil-production

kubectl --kubeconfig alice config set-context --current --namespace oil-production

As tenant owner, check for permissions to manage roles for each verb

kubectl --kubeconfig alice auth can-i get roles

kubectl --kubeconfig alice auth can-i create roles

kubectl --kubeconfig alice auth can-i update roles

kubectl --kubeconfig alice auth can-i patch roles

kubectl --kubeconfig alice auth can-i delete roles

kubectl --kubeconfig alice auth can-i deletecollection roles

Each command must return ‘yes’

Cleanup: As cluster admin, delete all the created resources

kubectl --kubeconfig cluster-admin delete tenant oil

Block access to cluster resources

Profile Applicability: L1

Type: Configuration Check

Category: Control Plane Isolation

Description: Tenants should not be able to view, edit, create or delete cluster (non-namespaced) resources such as Node, ClusterRole, ClusterRoleBinding, etc.

Rationale: Access controls should be configured for tenants so that a tenant cannot list, create, modify or delete cluster resources.

Audit:

As cluster admin, create a tenant

kubectl create -f - <<EOF
apiVersion: capsule.clastix.io/v1beta2
kind: Tenant
metadata:
  name: oil
spec:
  owners:
  - kind: User
    name: alice
EOF

./create-user.sh alice oil

As cluster admin, run the following command to retrieve the list of non-namespaced resources

kubectl --kubeconfig cluster-admin api-resources --namespaced=false

For all non-namespaced resources, and each verb (get, list, create, update, patch, watch, delete, and deletecollection) issue the following command:

kubectl --kubeconfig alice auth can-i <verb> <resource>

Each command must return no
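Checking every non-namespaced resource against every verb by hand is tedious, so the audit can be scripted. This is a minimal sketch under the assumption that the cluster-admin and alice kubeconfig files exist as used above; audit_cluster_resources is a hypothetical name. It lists only the combinations that are allowed, which makes the known exceptions easy to spot.

```shell
#!/bin/sh
# Hypothetical helper: report every cluster-scoped resource/verb pair
# that the tenant kubeconfig is allowed to use.
# Usage: audit_cluster_resources <admin-kubeconfig> <tenant-kubeconfig>
audit_cluster_resources() {
  acr_admin=$1
  acr_tenant=$2
  acr_status=0
  # "-o name" prints one resource name per line, including the API group
  for acr_resource in $(kubectl --kubeconfig "$acr_admin" api-resources --namespaced=false -o name); do
    for acr_verb in get list create update patch watch delete deletecollection; do
      acr_answer=$(kubectl --kubeconfig "$acr_tenant" auth can-i "$acr_verb" "$acr_resource" 2>/dev/null) || true
      case "$acr_answer" in
        yes*)
          echo "ALLOWED: $acr_verb $acr_resource"
          acr_status=1
          ;;
      esac
    done
  done
  return "$acr_status"
}
```

Running `audit_cluster_resources cluster-admin alice` returns non-zero if anything is allowed, so the output still has to be compared against the exceptions discussed in this section.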

Exception:

It should, but it does not:

kubectl --kubeconfig alice auth can-i create selfsubjectaccessreviews

yes

kubectl --kubeconfig alice auth can-i create selfsubjectrulesreviews

yes

kubectl --kubeconfig alice auth can-i create namespaces

yes

Any Kubernetes user can create SelfSubjectAccessReview and SelfSubjectRulesReview resources to check whether they are allowed to act, so the first two exceptions are not an issue.

kubectl --anyuser auth can-i --list
Resources                                      Non-Resource URLs  Resource Names  Verbs
selfsubjectaccessreviews.authorization.k8s.io  []                 []              [create]
selfsubjectrulesreviews.authorization.k8s.io   []                 []              [create]
                                               [/api/*]           []              [get]
                                               [/api]             []              [get]
                                               [/apis/*]          []              [get]
                                               [/apis]            []              [get]
                                               [/healthz]         []              [get]
                                               [/healthz]         []              [get]
                                               [/livez]           []              [get]
                                               [/livez]           []              [get]
                                               [/openapi/*]       []              [get]
                                               [/openapi]         []              [get]
                                               [/readyz]          []              [get]
                                               [/readyz]          []              [get]
                                               [/version/]        []              [get]
                                               [/version/]        []              [get]
                                               [/version]         []              [get]
                                               [/version]         []              [get]

To enable namespace self-service provisioning, Capsule intentionally gives permissions to create namespaces to all users belonging to the Capsule group:

kubectl describe clusterrolebindings capsule-namespace-provisioner
Name:         capsule-namespace-provisioner
Labels:       <none>
Annotations:  <none>
Role:
  Kind:  ClusterRole
  Name:  capsule-namespace-provisioner
Subjects:
  Kind   Name                Namespace
  ----   ----                ---------
  Group  capsule.clastix.io

kubectl describe clusterrole capsule-namespace-provisioner
Name:         capsule-namespace-provisioner
Labels:       <none>
Annotations:  <none>
PolicyRule:
  Resources   Non-Resource URLs  Resource Names  Verbs
  ---------   -----------------  --------------  -----
  namespaces  []                 []              [create]

Capsule controls self-service namespace creation by limiting the number of namespaces a user can create, using the tenant.spec.namespaceQuota option.

Cleanup: As cluster admin, delete all the created resources

kubectl --kubeconfig cluster-admin delete tenant oil

Block access to multitenant resources

Profile Applicability: L1

Type: Behavioral

Category: Tenant Isolation

Description: Each tenant namespace may contain resources set up by the cluster administrator for multi-tenancy, such as role bindings and network policies. Tenants should not be allowed to modify the namespaced resources created by the cluster administrator for multi-tenancy. However, for some resources such as network policies, tenants can configure additional instances of the resource for their workloads.

Rationale: Tenants can escalate privileges and impact other tenants if they can delete or modify required multi-tenancy resources such as namespace resource quotas or default network policy.

Audit:

As cluster admin, create a tenant

kubectl create -f - <<EOF
apiVersion: capsule.clastix.io/v1beta2
kind: Tenant
metadata:
  name: oil
spec:
  owners:
  - kind: User
    name: alice
  networkPolicies:
    items:
    - podSelector: {}
      policyTypes:
      - Ingress
      - Egress
    - egress:
      - to:
        - namespaceSelector:
            matchLabels:
              capsule.clastix.io/tenant: oil
      ingress:
      - from:
        - namespaceSelector:
            matchLabels:
              capsule.clastix.io/tenant: oil
      podSelector: {}
      policyTypes:
      - Egress
      - Ingress
EOF

./create-user.sh alice oil

As tenant owner, run the following command to create a namespace in the given tenant

kubectl --kubeconfig alice create ns oil-production

kubectl --kubeconfig alice config set-context --current --namespace oil-production

As tenant owner, retrieve the networkpolicies resources in the tenant namespace

kubectl --kubeconfig alice get networkpolicies

NAME POD-SELECTOR AGE

capsule-oil-0 <none> 7m5s

capsule-oil-1 <none> 7m5s

As tenant owner, try to modify or delete one of the networkpolicies

kubectl --kubeconfig alice delete networkpolicies capsule-oil-0

You should receive an error message denying the edit/delete request

Error from server (Forbidden): networkpolicies.networking.k8s.io "capsule-oil-0" is forbidden: User "alice" cannot delete resource "networkpolicies" in API group "networking.k8s.io" in the namespace "oil-production"

As tenant owner, you can create an additional networkpolicy inside the namespace

kubectl --kubeconfig alice create -f - << EOF
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: hijacking
  namespace: oil-production
spec:
  egress:
  - to:
    - ipBlock:
        cidr: 0.0.0.0/0
  podSelector: {}
  policyTypes:
  - Egress
EOF

However, due to the additive nature of networkpolicies, the DENY ALL policy set by the cluster admin prevents hijacking.

As tenant owner, list the RBAC permissions set by Capsule

kubectl --kubeconfig alice get rolebindings

NAME ROLE AGE

capsule-oil-0-admin ClusterRole/admin 11h

capsule-oil-1-capsule-namespace-deleter ClusterRole/capsule-namespace-deleter 11h

As tenant owner, try to change/delete the rolebinding to escalate permissions

kubectl --kubeconfig alice edit/delete rolebinding capsule-oil-0-admin

The rolebinding is immediately recreated by Capsule:

kubectl --kubeconfig alice get rolebindings

NAME ROLE AGE

capsule-oil-0-admin ClusterRole/admin 2s

capsule-oil-1-capsule-namespace-deleter ClusterRole/capsule-namespace-deleter 11h
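To observe the recreation without re-running the listing by hand, the check can be polled. This is a minimal sketch, assuming the deleted rolebinding keeps the same name when Capsule restores it, as the listing above suggests; wait_for_rolebinding and its default of 10 attempts are hypothetical choices.

```shell
#!/bin/sh
# Hypothetical helper: poll until a rolebinding exists again, or give up.
# Usage: wait_for_rolebinding <kubeconfig> <namespace> <name> [attempts]
wait_for_rolebinding() {
  wfr_attempts=${4:-10}
  wfr_try=0
  until kubectl --kubeconfig "$1" get rolebinding "$3" --namespace "$2" >/dev/null 2>&1; do
    wfr_try=$((wfr_try + 1))
    # Stop once the attempt budget is used up
    [ "$wfr_try" -lt "$wfr_attempts" ] || return 1
    sleep 1
  done
}
```

After deleting capsule-oil-0-admin as alice, `wait_for_rolebinding alice oil-production capsule-oil-0-admin` should return 0 within a few seconds if the reconciliation works as described.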

However, the tenant owner can create and assign permissions inside the namespace she owns

kubectl --kubeconfig alice create -f - << EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: oil-robot:admin
  namespace: oil-production
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: admin
subjects:
- kind: ServiceAccount
  name: default
  namespace: oil-production
EOF

Cleanup: As cluster admin, delete all the created resources

kubectl --kubeconfig cluster-admin delete tenant oil

Block access to other tenant resources

Profile Applicability: L1

Type: Behavioral

Category: Tenant Isolation

Description: Each tenant has its own set of resources, such as namespaces, service accounts, secrets, pods, services, etc. Tenants should not be allowed to access each other’s resources.

Rationale: A tenant’s resources must not be accessible by other tenants.

Audit:

As cluster admin, create a couple of tenants

kubectl create -f - <<EOF
apiVersion: capsule.clastix.io/v1beta2
kind: Tenant
metadata:
  name: oil
spec:
  owners:
  - kind: User
    name: alice
EOF

./create-user.sh alice oil

and

kubectl create -f - <<EOF
apiVersion: capsule.clastix.io/v1beta2
kind: Tenant
metadata:
  name: gas
spec:
  owners:
  - kind: User
    name: joe
EOF

./create-user.sh joe gas

As oil tenant owner, run the following command to create a namespace in the given tenant

kubectl --kubeconfig alice create ns oil-production

kubectl --kubeconfig alice config set-context --current --namespace oil-production

As gas tenant owner, run the following command to create a namespace in the given tenant

kubectl --kubeconfig joe create ns gas-production

kubectl --kubeconfig joe config set-context --current --namespace gas-production

As oil tenant owner, try to retrieve the resources in the gas tenant namespaces

kubectl --kubeconfig alice get serviceaccounts --namespace gas-production

You must receive an error message:

Error from server (Forbidden): serviceaccount is forbidden: User "alice" cannot list resource "serviceaccounts" in API group "" in the namespace "gas-production"

As gas tenant owner, try to retrieve the resources in the oil tenant namespaces

kubectl --kubeconfig joe get serviceaccounts --namespace oil-production

You must receive an error message:

Error from server (Forbidden): serviceaccount is forbidden: User "joe" cannot list resource "serviceaccounts" in API group "" in the namespace "oil-production"

Cleanup: As cluster admin, delete all the created resources

kubectl --kubeconfig cluster-admin delete tenants oil gas

Block add capabilities

Profile Applicability: L1

Type: Behavioral Check

Category: Control Plane Isolation

Description: Control Linux capabilities.

Rationale: Linux allows defining fine-grained permissions using capabilities. With Kubernetes, it is possible to add capabilities for pods that escalate the level of kernel access and allow other potentially dangerous behaviors.

Audit:

As cluster admin, define a PodSecurityPolicy with allowedCapabilities and map the policy to a tenant:

kubectl create -f - << EOF
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: tenant
spec:
  privileged: false
  # Required to prevent escalations to root.
  allowPrivilegeEscalation: false
  # The default set of capabilities are implicitly allowed
  # The empty set means that no additional capabilities may be added beyond the default set
  allowedCapabilities: []
  runAsUser:
    rule: RunAsAny
  seLinux:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
EOF

Note: make sure the PodSecurityPolicy admission controller is enabled on the API server: --enable-admission-plugins=PodSecurityPolicy

Then create a ClusterRole that grants use of this policy

kubectl create -f - << EOF
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: tenant:psp
rules:
- apiGroups: ['policy']
  resources: ['podsecuritypolicies']
  resourceNames: ['tenant']
  verbs: ['use']
EOF

And assign it to the tenant

kubectl apply -f - << EOF
apiVersion: capsule.clastix.io/v1beta2
kind: Tenant
metadata:
  name: oil
spec:
  owners:
  - kind: User
    name: alice
  additionalRoleBindings:
  - clusterRoleName: tenant:psp
    subjects:
    - kind: "Group"
      apiGroup: "rbac.authorization.k8s.io"
      name: "system:authenticated"
EOF

./create-user.sh alice oil

As tenant owner, run the following command to create a namespace in the given tenant

kubectl --kubeconfig alice create ns oil-production

kubectl --kubeconfig alice config set-context --current --namespace oil-production

As tenant owner, create a pod and see new capabilities cannot be added in the tenant namespaces

kubectl --kubeconfig alice apply -f - << EOF
apiVersion: v1
kind: Pod
metadata:
  name: pod-with-settime-cap
  namespace: oil-production
spec:
  containers:
  - name: busybox
    image: busybox:latest
    command: ["/bin/sleep", "3600"]
    securityContext:
      capabilities:
        add:
        - SYS_TIME
EOF

The pod must be blocked by the PodSecurityPolicy.
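The rejection can also be checked without creating anything, by submitting the manifest as a server-side dry run. This is a minimal sketch, assuming the pod manifest has been saved to a file and that the admission chain runs for dry-run requests on your cluster; expect_rejected is a hypothetical name. Note that any failure counts as a rejection here, including a malformed manifest or an unreachable API server, so the error text should still be reviewed.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the API server refuses the manifest.
# Usage: expect_rejected <kubeconfig> <manifest-file>
expect_rejected() {
  # --dry-run=server sends the request through admission without persisting it
  if kubectl --kubeconfig "$1" apply --dry-run=server -f "$2" >/dev/null 2>&1; then
    echo "UNEXPECTED: $2 was admitted"
    return 1
  fi
  echo "OK: $2 was rejected"
}
```

For example, `expect_rejected alice pod-with-settime-cap.yaml`, where the file name is an assumption, should print the OK line when the policy is enforced.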

Cleanup: As cluster admin, delete all the created resources

kubectl --kubeconfig cluster-admin delete tenant oil

kubectl --kubeconfig cluster-admin delete PodSecurityPolicy tenant

kubectl --kubeconfig cluster-admin delete ClusterRole tenant:psp

Block modification of resource quotas

Profile Applicability: L1

Type: Behavioral Check

Category: Tenant Isolation

Description: Tenants should not be able to modify the resource quotas defined in their namespaces.

Rationale: Resource quotas must be configured for isolation and fairness between tenants. Tenants should not be able to modify existing resource quotas as they may exhaust cluster resources and impact other tenants.

Audit:

As cluster admin, create a tenant

kubectl create -f - <<EOF
apiVersion: capsule.clastix.io/v1beta2
kind: Tenant
metadata:
  name: oil
spec:
  owners:
  - kind: User
    name: alice
  resourceQuotas:
    items:
    - hard:
        limits.cpu: "8"
        limits.memory: 16Gi
        requests.cpu: "8"
        requests.memory: 16Gi
    - hard:
        pods: "10"
        services: "50"
    - hard:
        requests.storage: 100Gi
EOF

./create-user.sh alice oil

As tenant owner, run the following command to create a namespace in the given tenant

kubectl --kubeconfig alice create ns oil-production

kubectl --kubeconfig alice config set-context --current --namespace oil-production

As tenant owner, check the permissions to modify/delete the quota in the tenant namespace:

kubectl --kubeconfig alice auth can-i create quota

kubectl --kubeconfig alice auth can-i update quota

kubectl --kubeconfig alice auth can-i patch quota

kubectl --kubeconfig alice auth can-i delete quota

kubectl --kubeconfig alice auth can-i deletecollection quota

Each command must return ‘no’

Cleanup: As cluster admin, delete all the created resources

kubectl --kubeconfig cluster-admin delete tenant oil

Block network access across tenant namespaces

Profile Applicability: L1

Type: Behavioral

Category: Tenant Isolation

Description: Block network traffic among namespaces from different tenants.

Rationale: Tenants cannot access services and pods in another tenant’s namespaces.

Audit:

As cluster admin, create a couple of tenants

kubectl create -f - <<EOF
apiVersion: capsule.clastix.io/v1beta2
kind: Tenant
metadata:
  name: oil
spec:
  owners:
  - kind: User
    name: alice
  networkPolicies:
    items:
    - ingress:
      - from:
        - namespaceSelector:
            matchLabels:
              capsule.clastix.io/tenant: oil
      podSelector: {}
      policyTypes:
      - Ingress
EOF

./create-user.sh alice oil

and

kubectl create -f - <<EOF
apiVersion: capsule.clastix.io/v1beta2
kind: Tenant
metadata:
  name: gas
spec:
  owners:
  - kind: User
    name: joe
  networkPolicies:
    items:
    - ingress:
      - from:
        - namespaceSelector:
            matchLabels:
              capsule.clastix.io/tenant: gas
      podSelector: {}
      policyTypes:
      - Ingress
EOF

./create-user.sh joe gas

As oil tenant owner, run the following commands to create a namespace and resources in the given tenant

kubectl --kubeconfig alice create ns oil-production

kubectl --kubeconfig alice config set-context --current --namespace oil-production

kubectl --kubeconfig alice run webserver --image nginx:latest

kubectl --kubeconfig alice expose pod webserver --port 80

As gas tenant owner, run the following commands to create a namespace and resources in the given tenant

kubectl --kubeconfig joe create ns gas-production

kubectl --kubeconfig joe config set-context --current --namespace gas-production

kubectl --kubeconfig joe run webserver --image nginx:latest

kubectl --kubeconfig joe expose pod webserver --port 80

As oil tenant owner, verify you can access the service in oil tenant namespace but not in the gas tenant namespace

kubectl --kubeconfig alice exec webserver -- curl http://webserver.oil-production.svc.cluster.local

kubectl --kubeconfig alice exec webserver -- curl http://webserver.gas-production.svc.cluster.local

Vice versa, as gas tenant owner, verify you can access the service in the gas tenant namespace but not in the oil tenant namespace

kubectl --kubeconfig joe exec webserver -- curl http://webserver.gas-production.svc.cluster.local

kubectl --kubeconfig joe exec webserver -- curl http://webserver.oil-production.svc.cluster.local
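The two-way reachability check above can be wrapped so that a blocked connection fails fast instead of hanging. This is a minimal sketch, assuming the webserver pod and service names used in this audit and that curl is available in the pod image; probe and check_isolation are hypothetical names, and the 5 second timeout is an arbitrary choice.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if curl inside the pod gets a response.
# Usage: probe <kubeconfig> <pod> <url>
probe() {
  kubectl --kubeconfig "$1" exec "$2" -- \
    curl --silent --fail --max-time 5 --output /dev/null "$3"
}

# Hypothetical helper: own namespace must be reachable, the other must not.
# Usage: check_isolation <kubeconfig> <own-namespace> <other-namespace>
check_isolation() {
  ci_own="http://webserver.$2.svc.cluster.local"
  ci_other="http://webserver.$3.svc.cluster.local"
  probe "$1" webserver "$ci_own" || { echo "FAIL: $ci_own unreachable"; return 1; }
  if probe "$1" webserver "$ci_other"; then
    echo "FAIL: $ci_other reachable"
    return 1
  fi
  echo "OK: $2 is isolated from $3"
}
```

Running `check_isolation alice oil-production gas-production` and then `check_isolation joe gas-production oil-production` covers both directions of the audit.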

Cleanup: As cluster admin, delete all the created resources

kubectl --kubeconfig cluster-admin delete tenants oil gas

Block privilege escalation

Profile Applicability: L1

Type: Behavioral Check

Category: Control Plane Isolation

Description: Control container permissions.

Rationale: The allowPrivilegeEscalation security setting allows a process to gain more privileges than its parent process. Processes in tenant containers should not be allowed to gain additional privileges.

Audit:

As cluster admin, define a PodSecurityPolicy that sets allowPrivilegeEscalation=false and map the policy to a tenant:

kubectl create -f - << EOF
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: tenant
spec:
  privileged: false
  # Required to prevent escalations to root.
  allowPrivilegeEscalation: false
  runAsUser:
    rule: RunAsAny
  seLinux:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
EOF

Note: make sure the PodSecurityPolicy admission controller is enabled on the API server: --enable-admission-plugins=PodSecurityPolicy

Then create a ClusterRole that grants use of this policy

kubectl create -f - << EOF
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: tenant:psp
rules:
- apiGroups: ['policy']
  resources: ['podsecuritypolicies']
  resourceNames: ['tenant']
  verbs: ['use']
EOF

And assign it to the tenant

kubectl apply -f - << EOF
apiVersion: capsule.clastix.io/v1beta2
kind: Tenant
metadata:
  name: oil
spec:
  owners:
  - kind: User
    name: alice
  additionalRoleBindings:
  - clusterRoleName: tenant:psp
    subjects:
    - kind: "Group"
      apiGroup: "rbac.authorization.k8s.io"
      name: "system:authenticated"
EOF

./create-user.sh alice oil

As tenant owner, run the following command to create a namespace in the given tenant

kubectl --kubeconfig alice create ns oil-production

kubectl --kubeconfig alice config set-context --current --namespace oil-production

As tenant owner, create a pod or container that sets allowPrivilegeEscalation=true in its securityContext.

kubectl --kubeconfig alice apply -f - << EOF
apiVersion: v1
kind: Pod
metadata:
  name: pod-priviliged-mode
  namespace: oil-production
spec:
  containers:
  - name: busybox
    image: busybox:latest
    command: ["/bin/sleep", "3600"]
    securityContext:
      allowPrivilegeEscalation: true
EOF

The pod must be blocked by the PodSecurityPolicy.

Cleanup: As cluster admin, delete all the created resources

kubectl --kubeconfig cluster-admin delete tenant oil

kubectl --kubeconfig cluster-admin delete PodSecurityPolicy tenant

kubectl --kubeconfig cluster-admin delete ClusterRole tenant:psp

Block privileged containers

Profile Applicability: L1

Type: Behavioral Check

Category: Control Plane Isolation

Description: Control container permissions.

Rationale: By default a container is not allowed to access any devices on the host, but a “privileged” container can access all devices on the host. A process within a privileged container can also get unrestricted host access. Hence, tenants should not be allowed to run privileged containers.

Audit:

As cluster admin, define a PodSecurityPolicy that sets privileged=false and map the policy to a tenant:

kubectl create -f - << EOF
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: tenant
spec:
  privileged: false
  # Required to prevent escalations to root.
  allowPrivilegeEscalation: false
  runAsUser:
    rule: RunAsAny
  seLinux:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
EOF

Note: make sure the PodSecurityPolicy admission controller is enabled on the API server: --enable-admission-plugins=PodSecurityPolicy

Then create a ClusterRole that grants use of this policy

kubectl create -f - << EOF
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: tenant:psp
rules:
- apiGroups: ['policy']
  resources: ['podsecuritypolicies']
  resourceNames: ['tenant']
  verbs: ['use']
EOF

And assign it to the tenant

kubectl apply -f - << EOF
apiVersion: capsule.clastix.io/v1beta2
kind: Tenant
metadata:
  name: oil
spec:
  owners:
  - kind: User
    name: alice
  additionalRoleBindings:
  - clusterRoleName: tenant:psp
    subjects:
    - kind: "Group"
      apiGroup: "rbac.authorization.k8s.io"
      name: "system:authenticated"
EOF

./create-user.sh alice oil

As tenant owner, run the following command to create a namespace in the given tenant

kubectl --kubeconfig alice create ns oil-production

kubectl --kubeconfig alice config set-context --current --namespace oil-production

As tenant owner, create a pod or container that sets privileges in its securityContext.

kubectl --kubeconfig alice apply -f - << EOF
apiVersion: v1
kind: Pod
metadata:
  name: pod-priviliged-mode
  namespace: oil-production
spec:
  containers:
  - name: busybox
    image: busybox:latest
    command: ["/bin/sleep", "3600"]
    securityContext:
      privileged: true
EOF

You must have the pod blocked by PodSecurityPolicy.
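The "must be blocked" step can be scripted. The following is a minimal sketch, assuming the pod manifest has been saved to a file and the tenant owner's kubeconfig is named alice; the function name expect_rejected is hypothetical. It relies on kubectl's server-side dry run, which sends the request through admission without persisting the object. A rejection for an unrelated reason (for example, invalid YAML) also counts as "rejected" here, so inspect the error text when in doubt.

```shell
# Sketch: treat a manifest as "blocked" when a server-side dry run is rejected.
# The manifest path and the kubeconfig name "alice" are illustrative assumptions.
expect_rejected() {
  er_manifest="$1"
  er_kubeconfig="${2:-alice}"
  if kubectl --kubeconfig "$er_kubeconfig" apply --dry-run=server -f "$er_manifest" >/dev/null 2>&1; then
    echo "FAIL: $er_manifest was admitted"
    return 1
  fi
  echo "PASS: $er_manifest was rejected"
}
```

For example, `expect_rejected pod-privileged.yaml alice` prints a PASS line when the API server refuses the pod.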

Cleanup: As cluster admin, delete all the created resources

kubectl --kubeconfig cluster-admin delete tenant oil

kubectl --kubeconfig cluster-admin delete PodSecurityPolicy tenant

kubectl --kubeconfig cluster-admin delete ClusterRole tenant:psp

Block use of existing PVs

Profile Applicability: L1

Type: Configuration Check

Category: Data Isolation

Description: Prevent a tenant from mounting existing volumes.

Rationale: Tenants have to be assured that their Persistent Volumes cannot be reclaimed by other tenants.

Audit:

As cluster admin, create a tenant

kubectl create -f - << EOF
apiVersion: capsule.clastix.io/v1beta2
kind: Tenant
metadata:
  name: oil
spec:
  owners:
  - kind: User
    name: alice
EOF

./create-user.sh alice oil

As tenant owner, check if you can access the persistent volumes

kubectl --kubeconfig alice auth can-i get persistentvolumes

kubectl --kubeconfig alice auth can-i list persistentvolumes

kubectl --kubeconfig alice auth can-i watch persistentvolumes

You must receive 'no' for all the requests.
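The three checks above can be wrapped in a small loop. This is a sketch only, assuming the tenant owner's kubeconfig file is named alice as in the commands above; the function name pv_access_denied is hypothetical.

```shell
# Sketch: succeed only if get, list, and watch on persistentvolumes are all denied.
# Assumes a kubeconfig file for the tenant owner (default name: alice).
pv_access_denied() {
  pv_kubeconfig="${1:-alice}"
  for pv_verb in get list watch; do
    # "kubectl auth can-i" prints "yes" or "no" on stdout.
    pv_answer="$(kubectl --kubeconfig "$pv_kubeconfig" auth can-i "$pv_verb" persistentvolumes 2>/dev/null)"
    if [ "$pv_answer" != "no" ]; then
      echo "FAIL: $pv_verb persistentvolumes returned '$pv_answer'"
      return 1
    fi
  done
  echo "PASS: tenant owner cannot get, list, or watch persistentvolumes"
}
```

Calling `pv_access_denied alice` stops at the first verb that is not denied and reports it.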

Block use of host IPC

Profile Applicability: L1

Type: Behavioral Check

Category: Host Isolation

Description: Tenants should not be allowed to share the host’s inter-process communication (IPC) namespace.

Rationale: The hostIPC setting allows pods to share the host’s inter-process communication (IPC) namespace allowing potential access to host processes or processes belonging to other tenants.

Audit:

As cluster admin, define a PodSecurityPolicy that restricts hostIPC usage and map the policy to a tenant:

kubectl create -f - << EOF
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: tenant
spec:
  privileged: false
  # Required to prevent escalations to root.
  allowPrivilegeEscalation: false
  hostIPC: false
  runAsUser:
    rule: RunAsAny
  seLinux:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
EOF

Note: make sure the PodSecurityPolicy admission controller is enabled on the API server: --enable-admission-plugins=PodSecurityPolicy

Then create a ClusterRole that grants use of this policy:

kubectl create -f - << EOF
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: tenant:psp
rules:
- apiGroups: ['policy']
  resources: ['podsecuritypolicies']
  resourceNames: ['tenant']
  verbs: ['use']
EOF

And assign it to the tenant:

kubectl apply -f - << EOF
apiVersion: capsule.clastix.io/v1beta2
kind: Tenant
metadata:
  name: oil
  namespace: oil-production
spec:
  owners:
  - kind: User
    name: alice
  additionalRoleBindings:
  - clusterRoleName: tenant:psp
    subjects:
    - kind: "Group"
      apiGroup: "rbac.authorization.k8s.io"
      name: "system:authenticated"
EOF

./create-user.sh alice oil

As tenant owner, run the following command to create a namespace in the given tenant

kubectl --kubeconfig alice create ns oil-production

kubectl --kubeconfig alice config set-context --current --namespace oil-production

As tenant owner, create a pod that shares the host IPC namespace:

kubectl --kubeconfig alice apply -f - << EOF
apiVersion: v1
kind: Pod
metadata:
  name: pod-with-host-ipc
  namespace: oil-production
spec:
  hostIPC: true
  containers:
  - name: busybox
    image: busybox:latest
    command: ["/bin/sleep", "3600"]
EOF

The pod must be blocked by the PodSecurityPolicy.

Cleanup: As cluster admin, delete all the created resources

kubectl --kubeconfig cluster-admin delete tenant oil

kubectl --kubeconfig cluster-admin delete PodSecurityPolicy tenant

kubectl --kubeconfig cluster-admin delete ClusterRole tenant:psp

Block use of host networking and ports

Profile Applicability: L1

Type: Behavioral Check

Category: Host Isolation

Description: Tenants should not be allowed to use host networking and host ports for their workloads.

Rationale: Using hostPort and hostNetwork allows tenant workloads to share the host networking stack, allowing potential snooping of network traffic across application pods.

Audit:

As cluster admin, define a PodSecurityPolicy that restricts hostPort and hostNetwork and map the policy to a tenant:

kubectl create -f - << EOF
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: tenant
spec:
  privileged: false
  # Required to prevent escalations to root.
  allowPrivilegeEscalation: false
  hostNetwork: false
  hostPorts: [] # empty means no allowed host ports
  runAsUser:
    rule: RunAsAny
  seLinux:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
EOF

Note: make sure the PodSecurityPolicy admission controller is enabled on the API server: --enable-admission-plugins=PodSecurityPolicy

Then create a ClusterRole that grants use of this policy:

kubectl create -f - << EOF
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: tenant:psp
rules:
- apiGroups: ['policy']
  resources: ['podsecuritypolicies']
  resourceNames: ['tenant']
  verbs: ['use']
EOF

And assign it to the tenant:

kubectl apply -f - << EOF
apiVersion: capsule.clastix.io/v1beta2
kind: Tenant
metadata:
  name: oil
  namespace: oil-production
spec:
  owners:
  - kind: User
    name: alice
  additionalRoleBindings:
  - clusterRoleName: tenant:psp
    subjects:
    - kind: "Group"
      apiGroup: "rbac.authorization.k8s.io"
      name: "system:authenticated"
EOF

./create-user.sh alice oil

As tenant owner, run the following command to create a namespace in the given tenant

kubectl --kubeconfig alice create ns oil-production

kubectl --kubeconfig alice config set-context --current --namespace oil-production

As tenant owner, create a pod using hostNetwork:

kubectl --kubeconfig alice apply -f - << EOF
apiVersion: v1
kind: Pod
metadata:
  name: pod-with-hostnetwork
  namespace: oil-production
spec:
  hostNetwork: true
  containers:
  - name: nginx
    image: nginx:latest
    ports:
    - containerPort: 80
EOF

As tenant owner, create a pod defining a container using hostPort:

kubectl --kubeconfig alice apply -f - << EOF
apiVersion: v1
kind: Pod
metadata:
  name: pod-with-hostport
  namespace: oil-production
spec:
  containers:
  - name: nginx
    image: nginx:latest
    ports:
    - containerPort: 80
      hostPort: 9090
EOF

In both cases above, the pod must be blocked by the PodSecurityPolicy.

Cleanup: As cluster admin, delete all the created resources

kubectl --kubeconfig cluster-admin delete tenant oil

kubectl --kubeconfig cluster-admin delete PodSecurityPolicy tenant

kubectl --kubeconfig cluster-admin delete ClusterRole tenant:psp

Block use of host path volumes

Profile Applicability: L1

Type: Behavioral Check

Category: Host Protection

Description: Tenants should not be able to mount host volumes and directories.

Rationale: Host volumes and directories can be used to access shared data or escalate privileges, and they create a tight coupling between a tenant workload and a host.

Audit:

As cluster admin, define a PodSecurityPolicy that restricts hostPath volumes and map the policy to a tenant:

kubectl create -f - << EOF
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: tenant
spec:
  privileged: false
  # Required to prevent escalations to root.
  allowPrivilegeEscalation: false
  volumes: # hostPath is not permitted
  - 'configMap'
  - 'emptyDir'
  - 'projected'
  - 'secret'
  - 'downwardAPI'
  - 'persistentVolumeClaim'
  runAsUser:
    rule: RunAsAny
  seLinux:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
EOF

Note: make sure the PodSecurityPolicy admission controller is enabled on the API server: --enable-admission-plugins=PodSecurityPolicy

Then create a ClusterRole that grants use of this policy:

kubectl create -f - << EOF
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: tenant:psp
rules:
- apiGroups: ['policy']
  resources: ['podsecuritypolicies']
  resourceNames: ['tenant']
  verbs: ['use']
EOF

And assign it to the tenant:

kubectl apply -f - << EOF
apiVersion: capsule.clastix.io/v1beta2
kind: Tenant
metadata:
  name: oil
  namespace: oil-production
spec:
  owners:
  - kind: User
    name: alice
  additionalRoleBindings:
  - clusterRoleName: tenant:psp
    subjects:
    - kind: "Group"
      apiGroup: "rbac.authorization.k8s.io"
      name: "system:authenticated"
EOF

./create-user.sh alice oil

As tenant owner, run the following command to create a namespace in the given tenant

kubectl --kubeconfig alice create ns oil-production

kubectl --kubeconfig alice config set-context --current --namespace oil-production

As tenant owner, create a pod defining a volume of type hostPath:

kubectl --kubeconfig alice apply -f - << EOF
apiVersion: v1
kind: Pod
metadata:
  name: pod-with-hostpath-volume
  namespace: oil-production
spec:
  containers:
  - name: busybox
    image: busybox:latest
    command: ["/bin/sleep", "3600"]
    volumeMounts:
    - mountPath: /tmp
      name: volume
  volumes:
  - name: volume
    hostPath:
      # directory location on host
      path: /data
      type: Directory
EOF

The pod must be blocked by the PodSecurityPolicy.

Cleanup: As cluster admin, delete all the created resources

kubectl --kubeconfig cluster-admin delete tenant oil

kubectl --kubeconfig cluster-admin delete PodSecurityPolicy tenant

kubectl --kubeconfig cluster-admin delete ClusterRole tenant:psp

Block use of host PID

Profile Applicability: L1

Type: Behavioral Check

Category: Host Isolation

Description: Tenants should not be allowed to share the host process ID (PID) namespace.

Rationale: The hostPID setting allows pods to share the host process ID namespace allowing potential privilege escalation. Tenant pods should not be allowed to share the host PID namespace.

Audit:

As cluster admin, define a PodSecurityPolicy that restricts hostPID usage and map the policy to a tenant:

kubectl create -f - << EOF
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: tenant
spec:
  privileged: false
  # Required to prevent escalations to root.
  allowPrivilegeEscalation: false
  hostPID: false
  runAsUser:
    rule: RunAsAny
  seLinux:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
EOF

Note: make sure the PodSecurityPolicy admission controller is enabled on the API server: --enable-admission-plugins=PodSecurityPolicy

Then create a ClusterRole that grants use of this policy:

kubectl create -f - << EOF
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: tenant:psp
rules:
- apiGroups: ['policy']
  resources: ['podsecuritypolicies']
  resourceNames: ['tenant']
  verbs: ['use']
EOF

And assign it to the tenant:

kubectl apply -f - << EOF
apiVersion: capsule.clastix.io/v1beta2
kind: Tenant
metadata:
  name: oil
  namespace: oil-production
spec:
  owners:
  - kind: User
    name: alice
  additionalRoleBindings:
  - clusterRoleName: tenant:psp
    subjects:
    - kind: "Group"
      apiGroup: "rbac.authorization.k8s.io"
      name: "system:authenticated"
EOF

./create-user.sh alice oil

As tenant owner, run the following command to create a namespace in the given tenant

kubectl --kubeconfig alice create ns oil-production

kubectl --kubeconfig alice config set-context --current --namespace oil-production

As tenant owner, create a pod that shares the host PID namespace:

kubectl --kubeconfig alice apply -f - << EOF
apiVersion: v1
kind: Pod
metadata:
  name: pod-with-host-pid
  namespace: oil-production
spec:
  hostPID: true
  containers:
  - name: busybox
    image: busybox:latest
    command: ["/bin/sleep", "3600"]
EOF

The pod must be blocked by the PodSecurityPolicy.

Cleanup: As cluster admin, delete all the created resources

kubectl --kubeconfig cluster-admin delete tenant oil

kubectl --kubeconfig cluster-admin delete PodSecurityPolicy tenant

kubectl --kubeconfig cluster-admin delete ClusterRole tenant:psp

Block use of NodePort services

Profile Applicability: L1

Type: Behavioral Check

Category: Host Isolation

Description: Tenants should not be able to create services of type NodePort.

Rationale: The NodePort service type configures host ports that cannot be secured using Kubernetes network policies and require upstream firewalls. Also, multiple tenants cannot use the same host port numbers.

Audit:

As cluster admin, create a tenant

kubectl create -f - << EOF
apiVersion: capsule.clastix.io/v1beta2
kind: Tenant
metadata:
  name: oil
spec:
  serviceOptions:
    allowedServices:
      nodePort: false
  owners:
  - kind: User
    name: alice
EOF

./create-user.sh alice oil

As tenant owner, run the following command to create a namespace in the given tenant

kubectl --kubeconfig alice create ns oil-production

kubectl --kubeconfig alice config set-context --current --namespace oil-production

As tenant owner, create a service of type NodePort in the tenant namespace:

kubectl --kubeconfig alice apply -f - << EOF
apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
  namespace: oil-production
spec:
  ports:
  - protocol: TCP
    port: 8080
    targetPort: 80
  selector:
    run: nginx
  type: NodePort
EOF

You must receive an error message denying the request:

Error from server (NodePort service types are forbidden for the tenant:

error when creating "STDIN": admission webhook "services.capsule.clastix.io" denied the request:

NodePort service types are forbidden for the tenant: please, reach out to the system administrators
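To confirm that the denial came from Capsule rather than from another admission component, the webhook name can be pulled out of the error text. This is a sketch that assumes the error follows the 'admission webhook "<name>" denied the request' wording shown above; the function name denying_webhook is hypothetical.

```shell
# Sketch: print the name of the admission webhook that denied a request,
# given the kubectl error text on stdin. Prints nothing if the text
# contains no webhook denial.
denying_webhook() {
  sed -n 's/.*admission webhook "\([^"]*\)" denied the request.*/\1/p'
}
```

For example, `kubectl --kubeconfig alice apply -f service.yaml 2>&1 | denying_webhook` is expected to print services.capsule.clastix.io for the NodePort service above (service.yaml is an assumed file name).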

Cleanup: As cluster admin, delete all the created resources

kubectl --kubeconfig cluster-admin delete tenant oil

Configure namespace object limits

Profile Applicability: L1

Type: Configuration

Category: Fairness

Description: Namespace resource quotas should be used to allocate, track and limit the number of objects, of a particular type, that can be created within a namespace.

Rationale: Resource quotas must be configured for each tenant namespace, to guarantee isolation and fairness across tenants.

Audit:

As cluster admin, create a tenant

kubectl create -f - <<EOF
apiVersion: capsule.clastix.io/v1beta2
kind: Tenant
metadata:
  name: oil
spec:
  owners:
  - kind: User
    name: alice
  resourceQuotas:
    items:
    - hard:
        pods: 100
        services: 50
        services.loadbalancers: 3
        services.nodeports: 20
        persistentvolumeclaims: 100
EOF

./create-user.sh alice oil

As tenant owner, run the following command to create a namespace in the given tenant

kubectl --kubeconfig alice create ns oil-production

kubectl --kubeconfig alice config set-context --current --namespace oil-production

As tenant owner, retrieve the configured quotas in the tenant namespace:

kubectl --kubeconfig alice get quota

NAME AGE REQUEST LIMIT
capsule-oil-0 23s persistentvolumeclaims: 0/100, pods: 0/100, services: 0/50, services.loadbalancers: 0/3, services.nodeports: 0/20

Make sure that a quota is configured for API objects: PersistentVolumeClaim, LoadBalancer, NodePort, Pods, etc.
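The visual check of the quota output can be automated with a small text filter. This is a sketch, assuming the "key: used/hard" layout shown in the output above; the function name quota_missing_keys and the key list passed to it are illustrative.

```shell
# Sketch: read "kubectl get quota" output on stdin and report every expected
# key that has no "<key>: used/hard" entry.
quota_missing_keys() {
  qm_output="$(cat)"
  qm_missing=""
  for qm_key in "$@"; do
    # The colon must follow the key directly, so "services" does not
    # match "services.nodeports".
    if ! printf '%s\n' "$qm_output" | grep -Eq "(^|[[:space:]])${qm_key}: [0-9]"; then
      qm_missing="$qm_missing $qm_key"
    fi
  done
  if [ -n "$qm_missing" ]; then
    echo "missing:$qm_missing"
    return 1
  fi
  echo "all quotas present"
}
```

A possible invocation is `kubectl --kubeconfig alice get quota | quota_missing_keys pods services services.loadbalancers services.nodeports persistentvolumeclaims`.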

Cleanup: As cluster admin, delete all the created resources

kubectl --kubeconfig cluster-admin delete tenant oil

Configure namespace resource quotas

Profile Applicability: L1

Type: Configuration

Category: Fairness

Description: Namespace resource quotas should be used to allocate, track, and limit a tenant’s use of shared resources.

Rationale: Resource quotas must be configured for each tenant namespace, to guarantee isolation and fairness across tenants.

Audit:

As cluster admin, create a tenant

kubectl create -f - <<EOF
apiVersion: capsule.clastix.io/v1beta2
kind: Tenant
metadata:
  name: oil
spec:
  owners:
  - kind: User
    name: alice
  resourceQuotas:
    items:
    - hard:
        limits.cpu: "8"
        limits.memory: 16Gi
        requests.cpu: "8"
        requests.memory: 16Gi
    - hard:
        requests.storage: 100Gi
EOF

./create-user.sh alice oil

As tenant owner, run the following command to create a namespace in the given tenant

kubectl --kubeconfig alice create ns oil-production

kubectl --kubeconfig alice config set-context --current --namespace oil-production

As tenant owner, retrieve the configured quotas in the tenant namespace:

kubectl --kubeconfig alice get quota

NAME AGE REQUEST LIMIT

capsule-oil-0 24s requests.cpu: 0/8, requests.memory: 0/16Gi limits.cpu: 0/8, limits.memory: 0/16Gi

capsule-oil-1 24s requests.storage: 0/100Gi

Make sure that a quota is configured for CPU, memory, and storage resources.

Cleanup: As cluster admin, delete all the created resources

kubectl --kubeconfig cluster-admin delete tenant oil

Require always imagePullPolicy

Profile Applicability: L1

Type: Configuration Check

Category: Data Isolation

Description: Set the image pull policy to Always for tenant workloads.

Rationale: Tenants have to be assured that their private images can only be used by those who have the credentials to pull them.

Audit:

As cluster admin, create a tenant

kubectl create -f - << EOF
apiVersion: capsule.clastix.io/v1beta2
kind: Tenant
metadata:
  name: oil
spec:
  imagePullPolicies:
  - Always
  owners:
  - kind: User
    name: alice
EOF

./create-user.sh alice oil

As tenant owner, run the following command to create a namespace in the given tenant

kubectl --kubeconfig alice create ns oil-production

kubectl --kubeconfig alice config set-context --current --namespace oil-production

As tenant owner, create a pod in the tenant namespace with imagePullPolicy=IfNotPresent:

kubectl --kubeconfig alice apply -f - << EOF
apiVersion: v1
kind: Pod
metadata:
  name: nginx
  namespace: oil-production
spec:
  containers:
  - name: nginx
    image: nginx:latest
    imagePullPolicy: IfNotPresent
EOF

You must receive an error message denying the request:

Error from server (ImagePullPolicy IfNotPresent for container nginx is forbidden, use one of the followings: Always): error when creating "STDIN": admission webhook "pods.capsule.clastix.io" denied the request: ImagePullPolicy IfNotPresent for container nginx is forbidden, use one of the followings: Always

Cleanup: As cluster admin, delete all the created resources

kubectl --kubeconfig cluster-admin delete tenant oil

Require PersistentVolumeClaim for storage

Profile Applicability: L1

Type: Behavioral Check

Category: na

Description: Tenants should not be able to use any volume type other than PersistentVolumeClaims.

Rationale: In some scenarios, it would be required to disallow usage of any core volume types except PVCs.

Audit:

As cluster admin, define a PodSecurityPolicy allowing only PersistentVolumeClaim volumes and map the policy to a tenant:

kubectl create -f - << EOF
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: tenant
spec:
  privileged: false
  # Required to prevent escalations to root.
  allowPrivilegeEscalation: false
  volumes:
  - 'persistentVolumeClaim'
  runAsUser:
    rule: RunAsAny
  seLinux:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
EOF

Note: make sure the PodSecurityPolicy admission controller is enabled on the API server: --enable-admission-plugins=PodSecurityPolicy

Then create a ClusterRole that grants use of this policy:

kubectl create -f - << EOF
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: tenant:psp
rules:
- apiGroups: ['policy']
  resources: ['podsecuritypolicies']
  resourceNames: ['tenant']
  verbs: ['use']
EOF

And assign it to the tenant:

kubectl apply -f - << EOF
apiVersion: capsule.clastix.io/v1beta2
kind: Tenant
metadata:
  name: oil
  namespace: oil-production
spec:
  owners:
  - kind: User
    name: alice
  additionalRoleBindings:
  - clusterRoleName: tenant:psp
    subjects:
    - kind: "Group"
      apiGroup: "rbac.authorization.k8s.io"
      name: "system:authenticated"
EOF

./create-user.sh alice oil

As tenant owner, run the following command to create a namespace in the given tenant

kubectl --kubeconfig alice create ns oil-production

kubectl --kubeconfig alice config set-context --current --namespace oil-production

As tenant owner, create a pod defining a volume of any core type other than PersistentVolumeClaim. For example:

kubectl --kubeconfig alice apply -f - << EOF
apiVersion: v1
kind: Pod
metadata:
  name: pod-with-hostpath-volume
  namespace: oil-production
spec:
  containers:
  - name: busybox
    image: busybox:latest
    command: ["/bin/sleep", "3600"]
    volumeMounts:
    - mountPath: /tmp
      name: volume
  volumes:
  - name: volume
    hostPath:
      # directory location on host
      path: /data
      type: Directory
EOF

The pod must be blocked by the PodSecurityPolicy.

Cleanup: As cluster admin, delete all the created resources

kubectl --kubeconfig cluster-admin delete tenant oil

kubectl --kubeconfig cluster-admin delete PodSecurityPolicy tenant

kubectl --kubeconfig cluster-admin delete ClusterRole tenant:psp

Require PV reclaim policy of delete

Profile Applicability: L1

Type: Configuration Check

Category: Data Isolation

Description: Force a tenant to use a Storage Class with reclaimPolicy=Delete.

Rationale: Tenants have to be assured that their Persistent Volumes cannot be reclaimed by other tenants.

Audit:

As cluster admin, create a Storage Class with reclaimPolicy=Delete

kubectl create -f - << EOF
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: delete-policy
reclaimPolicy: Delete
provisioner: clastix.io/nfs
EOF
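Before assigning the Storage Class to a tenant, its reclaim policy can be verified. This is a sketch, assuming cluster-admin credentials in the current kubeconfig; the function name storageclass_deletes_volumes is hypothetical. It reads reclaimPolicy, which is a top-level field of the StorageClass object.

```shell
# Sketch: succeed only if the named StorageClass has reclaimPolicy "Delete".
storageclass_deletes_volumes() {
  sc_policy="$(kubectl get storageclass "$1" -o jsonpath='{.reclaimPolicy}' 2>/dev/null)"
  [ "$sc_policy" = "Delete" ]
}
```

For example, `storageclass_deletes_volumes delete-policy && echo ok` prints ok only when the class created above uses the Delete policy.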

As cluster admin, create a tenant and assign the above Storage Class

kubectl create -f - << EOF
apiVersion: capsule.clastix.io/v1beta2
kind: Tenant
metadata:
  name: oil
spec:
  owners:
  - kind: User
    name: alice
  storageClasses:
    allowed:
    - delete-policy
EOF

./create-user.sh alice oil

As tenant owner, run the following command to create a namespace in the given tenant

kubectl --kubeconfig alice create ns oil-production

kubectl --kubeconfig alice config set-context --current --namespace oil-production

As tenant owner, create a Persistent Volume Claim in the tenant namespace that omits the Storage Class or uses any other Storage Class:

kubectl --kubeconfig alice apply -f - << EOF
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: pvc
  namespace: oil-production
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 12Gi
EOF

You must receive an error message denying the request:

Error from server (A valid Storage Class must be used, one of the following (delete-policy)):

error when creating "STDIN": admission webhook "pvc.capsule.clastix.io" denied the request:

A valid Storage Class must be used, one of the following (delete-policy)

Cleanup: As cluster admin, delete all the created resources

kubectl --kubeconfig cluster-admin delete tenant oil

kubectl --kubeconfig cluster-admin delete storageclass delete-policy

Require run as non-root user

Profile Applicability: L1

Type: Behavioral Check

Category: Control Plane Isolation

Description: Control container permissions.

Rationale: Processes in containers run as the root user (uid 0), by default. To prevent potential compromise of container hosts, specify a least-privileged user ID when building the container image and require that application containers run as non-root users.

Audit:

As cluster admin, define a PodSecurityPolicy with runAsUser=MustRunAsNonRoot and map the policy to a tenant:

kubectl create -f - << EOF
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: tenant
spec:
  privileged: false
  # Required to prevent escalations to root.
  allowPrivilegeEscalation: false
  runAsUser:
    # Require the container to run without root privileges.
    rule: MustRunAsNonRoot
  supplementalGroups:
    rule: MustRunAs
    ranges:
    # Forbid adding the root group.
    - min: 1
      max: 65535
  fsGroup:
    rule: MustRunAs
    ranges:
    # Forbid adding the root group.
    - min: 1
      max: 65535
EOF

Note: make sure the PodSecurityPolicy admission controller is enabled on the API server: --enable-admission-plugins=PodSecurityPolicy

Then create a ClusterRole that grants use of this policy:

kubectl create -f - << EOF
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: tenant:psp
rules:
- apiGroups: ['policy']
  resources: ['podsecuritypolicies']
  resourceNames: ['tenant']
  verbs: ['use']
EOF

And assign it to the tenant:

kubectl apply -f - << EOF
apiVersion: capsule.clastix.io/v1beta2
kind: Tenant
metadata:
  name: oil
spec:
  owners:
  - kind: User
    name: alice
  additionalRoleBindings:
  - clusterRoleName: tenant:psp
    subjects:
    - kind: "Group"
      apiGroup: "rbac.authorization.k8s.io"
      name: "system:authenticated"
EOF

./create-user.sh alice oil

As tenant owner, run the following command to create a namespace in the given tenant

kubectl --kubeconfig alice create ns oil-production

kubectl --kubeconfig alice config set-context --current --namespace oil-production

As tenant owner, create a pod or container that does not set runAsNonRoot to true in its securityContext, and runAsUser must not be set to 0.

kubectl --kubeconfig alice apply -f - << EOF
apiVersion: v1
kind: Pod
metadata:
  name: pod-run-as-root
  namespace: oil-production
spec:
  containers:
  - name: busybox
    image: busybox:latest
    command: ["/bin/sleep", "3600"]
EOF

The pod must be blocked by the PodSecurityPolicy.

Cleanup: As cluster admin, delete all the created resources

kubectl --kubeconfig cluster-admin delete tenant oil

kubectl --kubeconfig cluster-admin delete PodSecurityPolicy tenant

kubectl --kubeconfig cluster-admin delete ClusterRole tenant:psp

3 - Operating

3.1 - Setup

3.1.1 - Installation

Requirements

- Helm 3 is required when installing the Capsule Operator chart. Follow Helm’s official documentation to install Helm on your operating system.

- A Kubernetes cluster 1.16+ with the following Admission Controllers enabled:

- PodNodeSelector

- LimitRanger

- ResourceQuota

- MutatingAdmissionWebhook

- ValidatingAdmissionWebhook

- A Kubeconfig file accessing the Kubernetes cluster with cluster admin permissions.

- Cert-Manager is recommended but not required
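The Helm requirement can be checked before installing. This is a sketch, assuming the helm binary is on the PATH and that `helm version --short` prints a string starting with "v<major>." as Helm 3 does; the function name helm_major_ok is hypothetical.

```shell
# Sketch: succeed only if the Helm client reports major version 3 or later.
helm_major_ok() {
  hv_raw="$(helm version --short 2>/dev/null)" || return 1
  hv_major="${hv_raw#v}"       # drop the leading "v"
  hv_major="${hv_major%%.*}"   # keep the text before the first dot
  case "$hv_major" in
    ''|*[!0-9]*) return 1 ;;   # not a plain number: treat as unsupported
  esac
  [ "$hv_major" -ge 3 ]
}
```

For example, `helm_major_ok || echo "Helm 3 is required"` warns when an older or missing client is detected.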

Installation

We officially support installing Capsule only through the Helm chart. The chart itself handles the installation and upgrade of the required CustomResourceDefinitions. The following Artifact Hub repositories are official:

Perform the following steps to install the Capsule Operator:

Add repository:

helm repo add projectcapsule https://projectcapsule.github.io/charts

Install Capsule:

helm install capsule projectcapsule/capsule --version 0.12.4 -n capsule-system --create-namespace

or (OCI)

helm install capsule oci://ghcr.io/projectcapsule/charts/capsule --version 0.12.4 -n capsule-system --create-namespace

Show the status:

helm status capsule -n capsule-system

Upgrade the Chart

helm upgrade capsule projectcapsule/capsule -n capsule-system

or (OCI)

helm upgrade capsule oci://ghcr.io/projectcapsule/charts/capsule --version 0.12.4

Uninstall the Chart

helm uninstall capsule -n capsule-system

Considerations

Here are some key considerations to keep in mind when installing Capsule. Also check out the Best Practices for more information.

Admission Policies

While Capsule provides a robust framework for managing multi-tenancy in Kubernetes, it does not include built-in admission policies for enforcing specific security or operational standards for all possible aspects of a Kubernetes cluster. We provide additional policy recommendations here.

Certificate Management

We recommend using cert-manager to manage the TLS certificates for Capsule. This ensures that your Capsule installation is secure and that the certificates are automatically renewed. Capsule requires a valid TLS certificate for its admission webhook server. By default, Capsule reconciles its own TLS certificate. To use cert-manager, you can set the following values:

certManager:
  generateCertificates: true
tls:
  enableController: false
  create: false
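One way to apply these values is to write them to a file and pass it to Helm with -f. This is a sketch; the function name write_certmanager_values and the file name in the usage note are illustrative, while the keys are the ones listed above.

```shell
# Sketch: write the cert-manager related chart values to the given file.
write_certmanager_values() {
  cat > "$1" << 'EOF'
certManager:
  generateCertificates: true
tls:
  enableController: false
  create: false
EOF
}
```

After `write_certmanager_values capsule-values.yaml`, the file can be used with `helm upgrade --install capsule projectcapsule/capsule -n capsule-system -f capsule-values.yaml`.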

Webhooks

Capsule makes use of webhooks for admission control. Ensure that your cluster supports webhooks and that they are properly configured. The webhooks are automatically created by Capsule during installation. However, some of these webhooks will cause problems when Capsule is not running (this is especially problematic in single-node clusters). Here are the webhooks you need to watch out for.

Generally, we recommend using matchConditions for all the webhooks to avoid problems when Capsule is not running. You should exclude your system-critical components from the Capsule webhooks. For namespaced resources (pods, services, etc.), the webhooks select only namespaces that are part of a Capsule Tenant. If your system-critical components are not part of a Capsule Tenant, they are not affected by the webhooks. However, if system-critical components are part of a Capsule Tenant, exclude them from the Capsule webhooks by using matchConditions as well, or add more specific namespaceSelectors/objectSelectors. This can also improve performance.

The Webhooks below are the most important ones to consider.

Nodes

There is a webhook which catches interactions with the Node resource. This webhook is mainly of interest when you make use of Node Metadata. In any other case it will just cause you problems. By default the webhook is disabled, but you can enable it by setting the following value:

webhooks:
  hooks:
    nodes:
      enabled: true

Or you could at least consider setting the failure policy to Ignore, if you don’t want to disrupt critical nodes:

webhooks:
  hooks:
    nodes:
      failurePolicy: Ignore

If you still want to use the feature, you could exclude requests from the kubelets and from service accounts in the kube-system namespace (or any other namespace you want to exclude) by setting the following value:

webhooks:
  hooks:
    nodes:
      matchConditions:
      - name: 'exclude-kubelet-requests'
        expression: '!("system:nodes" in request.userInfo.groups)'
      - name: 'exclude-kube-system'
        expression: '!("system:serviceaccounts:kube-system" in request.userInfo.groups)'
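A webhook is only called when all of its matchConditions evaluate to true, so a request is skipped as soon as the user belongs to one of the excluded groups. The following sketch emulates that logic in shell for a list of group names; it is not a CEL evaluator, and the function name node_webhook_applies is hypothetical.

```shell
# Sketch: emulate the two matchConditions above for a list of user groups.
# Returns 0 when the webhook would be called, 1 when the request is skipped.
node_webhook_applies() {
  for nw_group in "$@"; do
    case "$nw_group" in
      system:nodes|system:serviceaccounts:kube-system) return 1 ;;
    esac
  done
  return 0
}
```

For example, `node_webhook_applies system:nodes system:authenticated` returns 1, which corresponds to a kubelet request bypassing the webhook.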

Namespaces

Namespaces are the most important resource in Capsule. The Namespace webhook is responsible for enforcing the Capsule Tenant boundaries. It is enabled by default and should not be disabled. However, you may change the matchConditions to exclude certain namespaces from the Capsule Tenant boundaries. For example, you can exclude the kube-system namespace by setting the following value:

webhooks:
  hooks:
    namespaces:
      matchConditions:
      - name: 'exclude-kube-system'
        expression: '!("system:serviceaccounts:kube-system" in request.userInfo.groups)'

GitOps

There are no specific requirements for using Capsule with GitOps tools like ArgoCD or FluxCD. You can manage Capsule resources as you would with any other Kubernetes resource.

ArgoCD

Manifests to get you started with ArgoCD. For ArgoCD you might need to skip the validation of the CapsuleConfiguration resources, otherwise there might be errors on the first install:

Information

The Validate=false option is required for the CapsuleConfiguration resource, because ArgoCD tries to validate the resource before the Capsule CRDs are installed via our CRD Lifecycle hook. Upstream Issue. This has mainly been observed in ArgoCD Applications using Server-Side Diff/Apply.

manager:
  options:
    annotations:
      argocd.argoproj.io/sync-options: "Validate=false,SkipDryRunOnMissingResource=true"

---
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: capsule
  namespace: argocd
  finalizers:
    - resources-finalizer.argocd.argoproj.io
spec:
  project: system
  source:
    repoURL: ghcr.io/projectcapsule/charts
    targetRevision: 0.12.4
    chart: capsule
    helm:
      valuesObject:
        crds:
          install: true
        certManager:
          generateCertificates: true
        tls:
          enableController: false
          create: false
        manager:
          options:
            annotations:
              argocd.argoproj.io/sync-options: "Validate=false,SkipDryRunOnMissingResource=true"
            capsuleConfiguration: default
            ignoreUserGroups:
              - oidc:administators
            capsuleUserGroups:
              - oidc:kubernetes-users
              - system:serviceaccounts:capsule-argo-addon
        monitoring:
          dashboards:
            enabled: true
          serviceMonitor:
            enabled: true
            annotations:
              argocd.argoproj.io/sync-options: SkipDryRunOnMissingResource=true
        proxy:
          enabled: true
          webhooks:
            enabled: true
          certManager:
            generateCertificates: true
          options:
            generateCertificates: false
            oidcUsernameClaim: "email"
            extraArgs:
              - "--feature-gates=ProxyClusterScoped=true"
              - "--feature-gates=ProxyAllNamespaced=true"
          serviceMonitor:
            enabled: true
            annotations:
              argocd.argoproj.io/sync-options: SkipDryRunOnMissingResource=true
  destination:
    server: https://kubernetes.default.svc
    namespace: capsule-system
  syncPolicy:
    automated:
      prune: true
      selfHeal: true
    syncOptions:
      - ServerSideApply=true
      - CreateNamespace=true
      - PrunePropagationPolicy=foreground
      - PruneLast=true
      - RespectIgnoreDifferences=true
    retry:
      limit: 5
      backoff:
        duration: 5s
        factor: 2
        maxDuration: 3m

---
apiVersion: v1
kind: Secret
metadata:
  name: capsule-repo
  namespace: argocd
  labels:
    argocd.argoproj.io/secret-type: repository
stringData:
  url: ghcr.io/projectcapsule/charts
  name: capsule
  project: system
  type: helm
  enableOCI: "true"

FluxCD

apiVersion: helm.toolkit.fluxcd.io/v2
kind: HelmRelease
metadata:
  name: capsule
  namespace: flux-system
spec:
  serviceAccountName: kustomize-controller
  targetNamespace: "capsule-system"
  interval: 10m
  releaseName: "capsule"
  chart:
    spec:
      chart: capsule
      version: "0.12.4"
      sourceRef:
        kind: HelmRepository
        name: capsule
      interval: 24h
  install:
    createNamespace: true
  upgrade:
    remediation:
      remediateLastFailure: true
  driftDetection:
    mode: enabled
  values:
    crds:
      install: true
    certManager:
      generateCertificates: true
    tls:
      enableController: false
      create: false
    manager:
      options:
        capsuleConfiguration: default
        ignoreUserGroups:
          - oidc:administators
        capsuleUserGroups:
          - oidc:kubernetes-users
          - system:serviceaccounts:capsule-argo-addon
    monitoring:
      dashboards:
        enabled: true
      serviceMonitor:
        enabled: true
    proxy:
      enabled: true
      webhooks:
        enabled: true
      certManager:
        generateCertificates: true
      options:
        generateCertificates: false
        oidcUsernameClaim: "email"
        extraArgs:
          - "--feature-gates=ProxyClusterScoped=true"
          - "--feature-gates=ProxyAllNamespaced=true"

---
apiVersion: source.toolkit.fluxcd.io/v1
kind: HelmRepository
metadata:
  name: capsule
  namespace: flux-system
spec:
  type: "oci"
  interval: 12h0m0s
  url: oci://ghcr.io/projectcapsule/charts

Security

Signature

To verify artifacts you need to have cosign installed. This guide assumes you are using v2.x of cosign. All of the signatures are created using keyless signing. You can set the environment variable COSIGN_REPOSITORY to point to this repository. For example:

# Docker Image
export COSIGN_REPOSITORY=ghcr.io/projectcapsule/capsule

# Helm Chart
export COSIGN_REPOSITORY=ghcr.io/projectcapsule/charts/capsule

To verify the signature of the docker image, run the following command. Replace <release_tag> with an available release tag:

COSIGN_REPOSITORY=ghcr.io/projectcapsule/capsule cosign verify ghcr.io/projectcapsule/capsule:<release_tag> \
  --certificate-identity-regexp="https://github.com/projectcapsule/capsule/.github/workflows/docker-publish.yml@refs/tags/*" \
  --certificate-oidc-issuer="https://token.actions.githubusercontent.com" | jq

To verify the signature of the Helm chart, run the following command. Replace <release_tag> with an available release tag:

COSIGN_REPOSITORY=ghcr.io/projectcapsule/charts/capsule cosign verify ghcr.io/projectcapsule/charts/capsule:<release_tag> \
  --certificate-identity-regexp="https://github.com/projectcapsule/capsule/.github/workflows/helm-publish.yml@refs/tags/*" \
  --certificate-oidc-issuer="https://token.actions.githubusercontent.com" | jq

Provenance

Capsule creates and attests to the provenance of its builds using the SLSA standard and meets the SLSA Level 3 specification. The attested provenance may be verified using the cosign tool.

To verify the provenance of the docker image, run the following command. Replace <release_tag> with an available release tag:

cosign verify-attestation --type slsaprovenance \
  --certificate-identity-regexp="https://github.com/slsa-framework/slsa-github-generator/.github/workflows/generator_container_slsa3.yml@refs/tags/*" \
  --certificate-oidc-issuer="https://token.actions.githubusercontent.com" \
  ghcr.io/projectcapsule/capsule:<release_tag> | jq .payload -r | base64 --decode | jq

To verify the provenance of the Helm chart, run the following command. Replace <release_tag> with an available release tag:

cosign verify-attestation --type slsaprovenance \
  --certificate-identity-regexp="https://github.com/slsa-framework/slsa-github-generator/.github/workflows/generator_container_slsa3.yml@refs/tags/*" \
  --certificate-oidc-issuer="https://token.actions.githubusercontent.com" \
  ghcr.io/projectcapsule/charts/capsule:<release_tag> | jq .payload -r | base64 --decode | jq

Software Bill of Materials (SBOM)

An SBOM (Software Bill of Materials) in CycloneDX JSON format is published for each release, including pre-releases. You can set the environment variable COSIGN_REPOSITORY to point to this repository. For example:

# Docker Image
export COSIGN_REPOSITORY=ghcr.io/projectcapsule/capsule

# Helm Chart
export COSIGN_REPOSITORY=ghcr.io/projectcapsule/charts/capsule

To inspect the SBOM of the docker image, run the following command. Replace <release_tag> with an available release tag:

COSIGN_REPOSITORY=ghcr.io/projectcapsule/capsule cosign download sbom ghcr.io/projectcapsule/capsule:<release_tag>

To inspect the SBOM of the Helm chart, run the following command. Replace <release_tag> with an available release tag:

COSIGN_REPOSITORY=ghcr.io/projectcapsule/charts/capsule cosign download sbom ghcr.io/projectcapsule/charts/capsule:<release_tag>

Compatibility